Making data-driven decisions for product optimization is practically an everyday task in the life of a product manager. As product managers, we’re familiar with testing methodologies like A/B testing, usability testing, and leveraging customer feedback to understand responses to product optimizations. These are among the most widely used practices in the industry, and their benefits have been validated time and again.

However, a question arises when decision-making needs to happen with immediacy. In scenarios like an eCommerce homepage experience or a campaign to acquire new customers, we’re dealing with fast-changing conditions where delayed results won’t cut it.

This brings up a challenge: do traditional testing methods still hold up, or do we need a more adaptive, on-the-fly testing methodology?

Beyond A/B testing

Dynamic content calls for dynamic testing methodologies

A/B testing and usability testing are robust methodologies, but they often operate on a "lagging" basis. By "lagging," I mean they require a waiting period before generating insights.

In usability testing, for instance, we need a prototype ready to show users, collect feedback, and then apply those insights sequentially towards product development. Similarly, A/B testing involves comparing two (or more) versions of an experience, collecting feedback, and making decisions after a designated period.

For A/B testing, it’s common practice to let variations run for at least 7 to 14 days so that enough data accumulates for statistically significant results. The exact timeframe depends on factors such as traffic volume, baseline conversion rate, and the size of the effect you want to detect, but the core principle remains: a variant experience needs to be live long enough to capture meaningful user responses.
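To make the waiting period concrete, here is a rough sketch of how you might estimate test duration before launching. It uses the standard normal-approximation sample size formula for comparing two conversion rates. The function names, the 5% significance level, the 80% power, and the traffic figures are all illustrative assumptions, not values from any particular testing tool.

```python
import math
from statistics import NormalDist


def samples_per_variant(baseline_rate, min_detectable_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant for a two-sided test.

    min_detectable_lift is relative: 0.20 means "detect a 20% improvement".
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_run(daily_visitors, n_variants, baseline_rate, min_detectable_lift):
    """Days needed if daily traffic is split evenly across all variants."""
    needed = samples_per_variant(baseline_rate, min_detectable_lift) * n_variants
    return math.ceil(needed / daily_visitors)


if __name__ == "__main__":
    # Hypothetical inputs: 2,000 visitors a day, 5% baseline conversion,
    # and a 20% relative lift as the smallest change worth detecting.
    print(days_to_run(daily_visitors=2000, n_variants=2,
                      baseline_rate=0.05, min_detectable_lift=0.20))
```

Try halving the traffic or the lift you want to detect and the estimate grows quickly, which is exactly why low-traffic experiences can feel stuck in a "lagging" test.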

In today's world where data is often dynamically generated and immediate optimization is increasingly critical, A/B testing can feel restrictive. We need a methodology that provides insights on the spot.

Enter multi-armed bandit testing

This is where Multi-Armed Bandit (MAB) testing shines. A helpful way to understand MAB is by drawing an analogy with slot machines. Imagine you’re in a casino with five slot machines.

To maximize your chances of winning, you experiment by inserting a quarter into each machine. Over time, you start allocating more quarters to the machines that pay out better. However, you never entirely abandon the other machines because any one of them might start performing better at any moment.

Now, let’s apply this concept to customer experiences. Suppose you’re running an ad for an apparel category with five different design variants - one version is minimalist, another playful, another color-focused, and so on.

Using MAB testing, you launch all five variants simultaneously, and a "smart" algorithm steers more traffic to whichever variant is currently performing best, while still sending a small share to the others so it can keep learning. With MAB testing, you’re constantly learning and reallocating traffic to maximize click-through rate.
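For readers who want to see what the "smart" algorithm might look like, below is a minimal sketch of one common bandit strategy called epsilon-greedy. It is only one of several options (Thompson sampling and UCB are others), and nothing here reflects a specific vendor's implementation. The class name, the 10% exploration share, and the click-through rates in the example are assumptions chosen for illustration.

```python
import random


class EpsilonGreedyBandit:
    """Shows the best-known variant most of the time, explores the rest occasionally."""

    def __init__(self, variants, epsilon=0.1, rng=None):
        self.variants = list(variants)
        self.epsilon = epsilon            # share of traffic reserved for exploration
        self.rng = rng or random.Random()
        self.impressions = {v: 0 for v in self.variants}
        self.clicks = {v: 0 for v in self.variants}

    def click_rate(self, variant):
        shown = self.impressions[variant]
        return self.clicks[variant] / shown if shown else 0.0

    def choose(self):
        # Give every variant at least one impression before comparing them.
        untried = [v for v in self.variants if self.impressions[v] == 0]
        if untried:
            return self.rng.choice(untried)
        # Explore: occasionally pick a random variant, like the spare quarter
        # dropped into a slot machine that has not paid out recently.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.variants)
        # Exploit: otherwise show the variant with the best observed click rate.
        return max(self.variants, key=self.click_rate)

    def record(self, variant, clicked):
        self.impressions[variant] += 1
        self.clicks[variant] += int(clicked)


def simulate(true_click_rates, rounds=5000, epsilon=0.1, seed=42):
    """Replay simulated visitors against assumed 'true' click rates."""
    rng = random.Random(seed)
    bandit = EpsilonGreedyBandit(true_click_rates, epsilon=epsilon, rng=rng)
    for _ in range(rounds):
        variant = bandit.choose()
        clicked = rng.random() < true_click_rates[variant]
        bandit.record(variant, clicked)
    return bandit


if __name__ == "__main__":
    # Hypothetical click-through rates for five ad designs.
    rates = {"minimalist": 0.04, "playful": 0.06, "color-focused": 0.05,
             "bold": 0.03, "classic": 0.045}
    result = simulate(rates)
    for name in rates:
        print(name, result.impressions[name], round(result.click_rate(name), 3))
```

The `epsilon` setting is the design choice to watch: a higher value keeps more traffic on exploration, so the algorithm notices shifts sooner, while a lower value commits harder to the current leader.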

MAB in action: Industry-specific applications

To see how MAB testing fits into different sectors, let’s look at a few examples where this methodology has a clear edge over traditional A/B testing:

- eCommerce: During high-traffic periods like the holidays, MAB testing can help optimize homepage banners and product showcases in near real time. For example, an online retailer might try out different homepage banners, each with unique messaging, such as “Holiday Deals,” “Top Gifts,” or “Free Shipping.”

With MAB, traffic dynamically shifts to the banner with the highest conversion rate, allowing retailers to maximize engagement and sales without waiting for a fixed test period to end. This is a game-changer during time-sensitive sales periods when every second counts.

- SaaS (Software as a Service): In the SaaS world, MAB testing brings agility to the onboarding process. Let’s say a SaaS company is experimenting with different onboarding flows - like guided tours, video tutorials, or quick start guides.

MAB can automatically direct more users to the flow that’s driving the highest engagement (or showing the lowest abandonment rates), giving the company real-time feedback and enabling them to fine-tune the experience quickly. The result? A quicker path to onboarding success for new users, right when they need it most.

- Media and streaming services: Media platforms can benefit from MAB testing by optimizing content recommendations and enhancing homepage layouts. Take a streaming service, for instance: they might experiment with various content carousels, such as “Trending Now,” “New Releases,” or personalized “For You” recommendations.

MAB allows the platform to reallocate traffic to the carousels that get the most clicks, making content discovery feel more appealing and seamless. The result is a more engaging user experience that keeps viewers on the platform longer and encourages them to explore more content.

These examples show how MAB testing offers flexibility and responsiveness across industries, making it a strong fit for fast-moving environments. With MAB, product managers can deliver adaptive, high-impact experiences where it matters most.

Choosing the right methodology

A/B testing is ideal when you’re making a discrete decision or comparing significant changes to guide a future course of action. That doesn’t mean testing stops after one round; A/B tests can be repeated iteratively, using the same method to evaluate new variations and drive ongoing learning.

If you have two or more distinct ideas for a feature or layout and need a controlled environment to evaluate each, A/B testing gives you a statistically sound framework to determine the best option. The methodology works well for evaluating substantial, single changes where immediate feedback isn’t critical.
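As an illustration of what "statistically sound" can mean in practice, here is a small sketch of a two-proportion z-test, one common way to compare the conversion rates of two variants once a test has finished. The function name and the visitor counts are hypothetical, and many teams rely on their experimentation platform to run this kind of calculation for them.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, p_value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation in the data, nothing to distinguish
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: 400 of 8,000 visitors converted on A, 480 of 8,000 on B.
    z, p = two_proportion_z_test(400, 8000, 480, 8000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below the threshold you chose before the test (0.05 is a common convention) suggests the difference is unlikely to be noise alone.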

MAB testing, on the other hand, is perfect for environments where continuous optimization is necessary to maximize moment-by-moment performance. When content is dynamic, and the goal is to optimize on-the-fly—like adapting campaign content or website layout to changing user preferences—MAB testing outshines A/B testing. It adapts in the moment, ensuring that you’re consistently optimizing towards the best-performing variant without waiting for a fixed testing period.

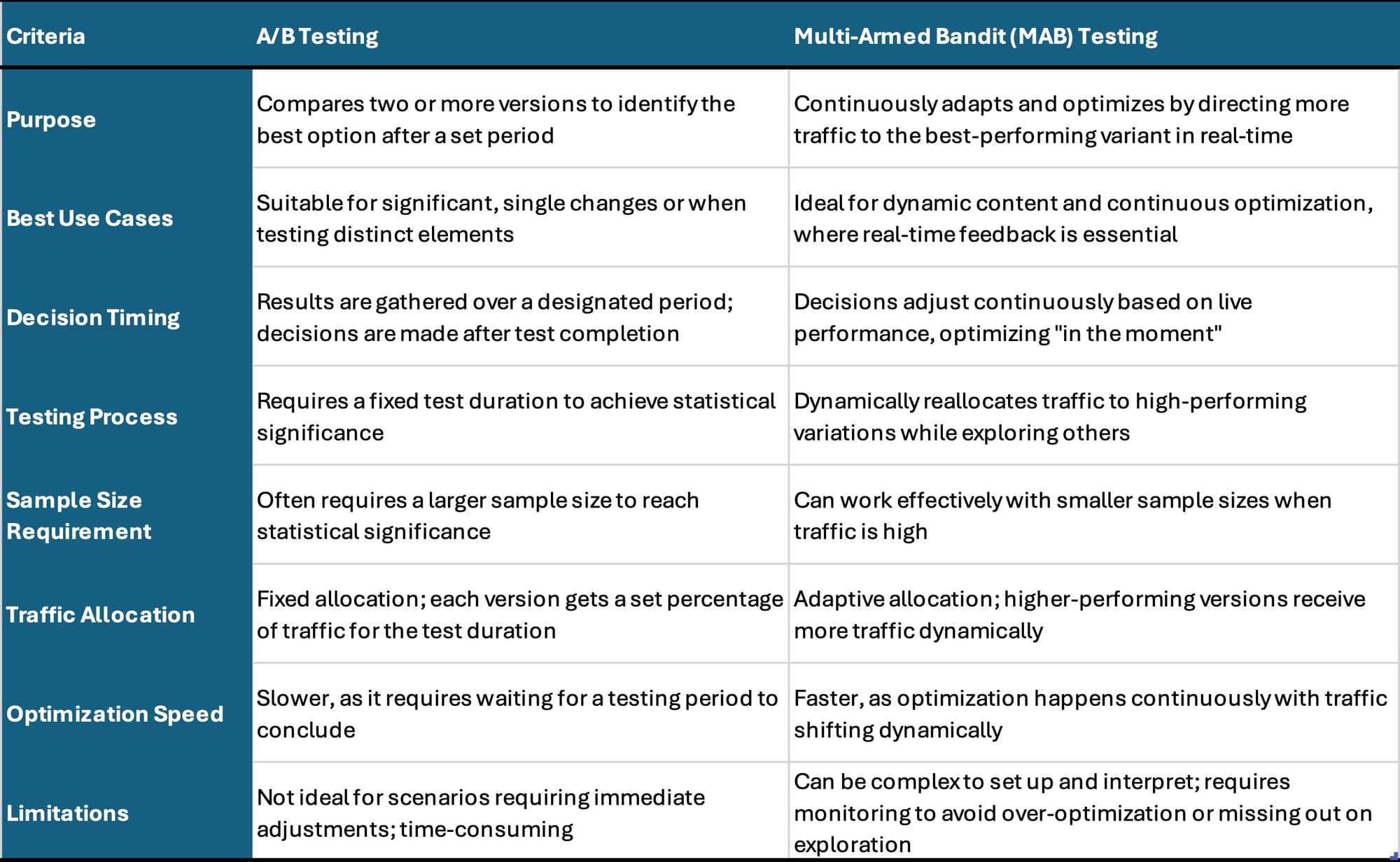

The table below highlights key distinctions between these two approaches, helping you identify the best method based on your testing goals and context.

Conclusion: Expanding our toolkit for a dynamic future

While A/B testing and other established methodologies have become industry standards for a reason, they’re part of a broader toolkit that continues to evolve as our products and customer expectations do. Each testing method, whether it’s A/B, MAB, or another, offers strengths that can support different needs in product development.

As product managers, the key is knowing when to use which approach to deliver the most impact, especially as we navigate a world where Generative AI is reshaping content and customer experiences in real time.

MAB testing adds an agile, adaptive option to our repertoire, well-suited to dynamic, rapidly evolving scenarios. It doesn’t replace A/B testing but rather complements it by offering a real-time optimization solution where continuous learning and adaptation are critical.

Looking ahead, the possibilities with AI-driven content and decision-making are virtually limitless. By staying informed and adaptable, product managers can leverage these methodologies strategically, balancing the reliability of established methods with the agility of newer, data-driven approaches.

In the end, it’s about choosing the right tool for the right job to deliver meaningful, engaging customer experiences in a rapidly evolving landscape.

