My name is Nishaat Vasi. I'm a Group Product Manager at Zipcar, and I'd love to share some of what I've learned as a Platform Product Manager, including some of my failures and the lessons I took from them.

Product journey so far

My product journey started at MathWorks, a mathematical modeling and simulation platform. I was fortunate to work with cutting-edge simulation technology, which helped AODA, Ford, and some US defense contractors model and iterate on verification techniques.

That was product management from a B2B perspective. From there, I moved to TripAdvisor, where I was responsible for building out the content optimization needed for room and hotel content. Think APIs: how do we spruce up content, how do we massage it, and what makes more sense to show in one shot versus another?

That role was really about the B2C side of product management. Almost three years ago, I moved to Zipcar, where my team and I help evolve and manage the core member experience platform.

Think about the basic eCommerce workflow of searching for and reserving a car for a short-term rental, the billing and payment systems, the data systems, and the tools that help our marketing team manage communications and pricing for different member segments.

So MathWorks was B2B, TripAdvisor was B2C, and Zipcar is a nice mixture of both.

If I step back, what excites me about product is working on complex platforms. That means always having a two-steps-ahead mentality, thinking about what's coming next, and building not for now but for the future.

The agenda

In this article, I'll talk about an ambitious project we took on at Zipcar. It has been running for more than a couple of years now, and it's really about rebuilding the entire Zipcar platform. I'll get to that in a bit.

But before that, what is Zipcar?

How Zipcar works

Zipcar is basically the largest car-sharing network. It was founded in Boston in 2000 by two MIT and Harvard alums. It's hard to imagine it's been 20 years since Zipcar came to market.

At a high level, members subscribe to the service. After passing some validity and driver's license checks, you can search for a car in and around your urban neighborhood, book it, and drive it by the hour or by the day. You're charged for what you drive.

You pay a subscription fee plus a usage charge based on what you use. It's cars on demand without the hassle of ownership. Fuel is included, insurance is included, and so is parking, since you return the car to the same spot. Our markets are largely in urban areas.

The mission is to reduce the footprint of car ownership: through car sharing, you use a car only when you need it.

A few years ago, Zipcar set itself a lofty goal: to completely overhaul its core technology.

Platform overview

Think about everything that makes this platform work. That includes the in-car unit that acts as the car's brain, the member experience, and basically everything else.

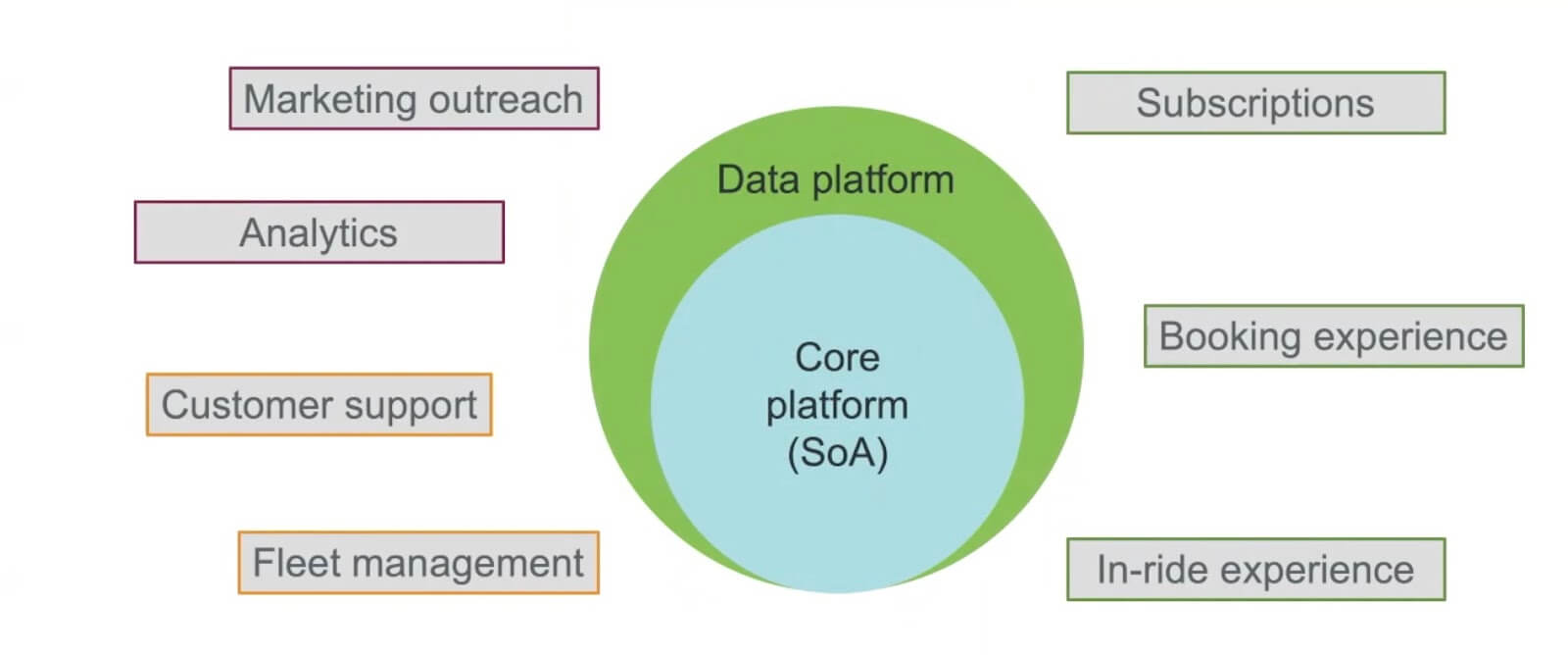

This effort is really about re-architecting 15-plus years of work. The diagram above is a very simplified view of what the platform looks like.

At the center is the core platform. This is the end state of the new platform, and I'll refer to it again and again in this article. It shows the nuts and bolts of a core service-oriented platform.

It consists of around 150 services in a typical microservices infrastructure, plus a new data platform in AWS. This core technology supports a number of things.

Experience

On the right-hand side are the member experiences. There's the join experience for a subscription, which is the top of your funnel. Once you're a member, there's the booking experience: how do you search for and reserve a car on demand?

Once you've booked the car, how do we help members find their way to it, follow the parking rules, and be considerate of the next person who might want to use the car? That's all the experience part.

Internal tools and services

The left-hand side is about internal tools and services. There's a global network of 10,000 cars that needs to be managed day to day.

Our fleet operators need tools and capabilities to fix a flat tire, know when a battery is running low and go jump it, move a car from one place to another, or answer customer questions and concerns on the phone.

There's also how we communicate and interact with our members. That covers marketing communications, pricing strategy, and of course the analytics that go with them, such as segments.

In a nutshell, and very simplified, the goal is a service-oriented architecture. It has to support a set of member-facing experiences as well as the services and internal tooling behind them.

Re-platforming challenges

A multi-year project like this comes with a fair number of challenges.

Biz

The first challenge is on the business side. The easiest way to describe it is to think of a Boeing 747. It has four engines, and the task is to replace them one by one, mid-flight, while holding the same flight path and staying within budget.

You need to keep the business running while migrating to the new technology stack. The reason for doing this at all is that the existing platform is built on older technology and a monolithic architecture, which makes it harder to innovate over time.

That's why moving to microservices, or a service-oriented architecture, is crucial to survival.

Product experience

From a member experience perspective, the central challenge in any re-platforming effort is defining parity. There are so many features built into this product under the hood. Do we need all of them?

There's always the question of what is MVP and what's not. When you try to understand a feature, you dig deeper and uncover all the intertwined business logic. All the while, you're juggling internal tools and services alongside the external member experience.

It's a fun balancing act.

Tech

On the technology side, of course, the biggest hurdle is that you'll always need to bridge two technology platforms. You can't say, "One day I'm just going to flip the switch and go from technology A to B." There has to be a gradual transition; it's a multi-year effort.
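One common way to make such a gradual transition concrete is the strangler fig pattern. A routing layer sends each request either to the legacy platform or to the new services, feature by feature and for a growing share of members. The sketch below is a minimal illustration under stated assumptions: the feature names, the `route_for` helper, and the percentage-based rollout are all hypothetical, not how Zipcar actually routed traffic.

```python
import hashlib


def route_for(member_id: str, feature: str, migrated: dict[str, int]) -> str:
    """Decide whether a request goes to the 'new' platform or the 'legacy' one.

    `migrated` maps a (hypothetical) feature name to the percentage of members
    (0-100) whose traffic for that feature is served by the new platform.
    Features missing from the map stay entirely on legacy.
    """
    rollout_percent = migrated.get(feature, 0)
    # Hash member + feature into a stable bucket 0-99, so a member keeps
    # seeing the same platform as the rollout percentage grows.
    digest = hashlib.sha256(f"{feature}:{member_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100
    return "new" if bucket < rollout_percent else "legacy"


# Hypothetical rollout state: search fully migrated, billing at 25%.
MIGRATED = {"search": 100, "billing": 25}

for member in ("m-1", "m-2", "m-3"):
    print(member, route_for(member, "search", MIGRATED), route_for(member, "billing", MIGRATED))
```

Deterministic hashing rather than random sampling is the key design choice here. Raising a feature's percentage only moves additional members onto the new platform and never flips anyone back, which keeps the "engine swap" predictable.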

Build-versus-buy decisions are always involved. What core technology do we care about and need to own, and what can we buy as a service?

Any re-platforming effort involves some pain. Here are some of the lessons we've learned at Zipcar over the years.

Build for product growth

Number one, build it for product growth.

Identify core product assets that can be reused, invest here

What I mean is this: the challenging part was figuring out which features are used most. What are the core five or 10 product assets that you, as a platform product manager, need to keep nurturing?

You absolutely want to keep investing in these. You don't want to give up much parity work there; that's your meat and potatoes. Once you've figured that out, you're really designing for the future, whether in a re-platforming effort or in any digital platform product.

It's funny what "future" means here. Maybe four years ago, when I thought about future strategy as a product manager, I was thinking three to five years out; that's what long-term vision looked like. These days, especially in mobility, things change constantly.

Every week something new hits the market, or someone goes belly up. So thinking even one to two years out is asking a lot.

Push PMs to think strategically

As a platform PM, I'm responsible for pushing the experience product owners and product managers, for example those who own the booking or join experience, with questions like "What do you need next?" or "What's your vision?" I've found it super useful.

Sometimes, to be honest, people say, "I really don't care, I just need this feature done." At that point, you have to bite the bullet and say, "Okay, I'm going to figure it out for you, and here's my best guess."

Ideation sessions

The third thing that has worked well for us is continuous ideation sessions.

Typical design thinking still applies to a platform product. I think unconstrained first, narrow it down to measurable outcomes, and then use those outcomes to figure out:

- Which two or three features can you pick that are parity-plus, that are new, or that help toward your larger vision, and how do they connect back to the company's current OKRs?

If you don't make that connection, it's going to be very hard to sell the idea and get investment.

Examples

A couple of examples.

Products

Last month, we launched a product in one market, targeted at our business segment. It's interesting because this product set already more or less exists on our current platform, and my job was to PM it through onto the new platform environment.

But I looked at that market and didn't understand why our other markets weren't selling this product; there's a business opportunity there. So I'd say more than 50% of my time went into understanding what future needs might be, or why we hadn't been able to sell into those other markets.

While migrating, we set ourselves up with more than what was asked for: an MVP that's enough for us to go sell into those markets. That's an example of looking into the future. Maybe six months down the line, or even just two months, we can sell something that didn't exist before.

Self-serve issue resolution

Another example: Zipcar is a self-service business, so ideally everything should be handled in the app. You join, you instantly get access, you search and reserve, and you walk up to the car. You literally hit unlock on your phone, and the car unlocks over Bluetooth.

There are times when a member calls us, and the near-term goal might be: "Let's try some call-avoidance strategies. Can we use chatbots, help center articles, or product features so a member can self-serve?"

That's what I'd call an immediate or parity need for a platform or re-platforming effort. But think ahead, and force the topic. Maybe there's a way to leverage this self-service to help distribute our fleet of 10,000 cars more efficiently.

We know fleet management carries a lot of cost, and it's expensive to move a car from one place to another, especially in an urban environment. So how can we leverage these self-service techniques to help the business or solve other vehicle management problems?

That's really about building for product growth, forcing the conversation, even if folks don't want to have it, making some educated guesses along the way, and really trying to ensure you don't change course every month or so.

Data models matter

The second lesson is about data models. One thing TripAdvisor really ingrained in my head, and that I'm a big fan of, is this: if it's not worth measuring, it's not worth shipping.

Part of that is that once you've figured out your core product assets, you need a strong data model underneath them. From a core platform perspective, that model can help with so many different things.

Data models require concrete understanding of user pain

For example, we've built our core data models in Looker and our AWS data marts. Instead, we could have just migrated all the data from our on-premises Oracle databases into AWS and been done with it. We would then have kept treating data as a second-class citizen, used only for after-the-fact reporting or analytics.

But there was an opportunity to ask what's really important. We need a core member model to understand how members interact with our product and how we communicate with them. We also need a core vehicle model to capture the different touchpoints with a vehicle.

If we get these two models, and the interactions between them, right, this rich data set has so many uses within our tools themselves.
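To make the member/vehicle idea concrete, here is a minimal sketch of a core member model, a core vehicle model, and the interaction records that connect them. All field names, interaction kinds, and helper functions are hypothetical. The real models in Looker and the AWS data marts would be far richer.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional


@dataclass(frozen=True)
class Member:
    member_id: str
    home_market: str


@dataclass(frozen=True)
class Vehicle:
    vehicle_id: str
    market: str


@dataclass(frozen=True)
class Interaction:
    """One touchpoint between a member and the platform, optionally tied to a vehicle."""
    member_id: str
    vehicle_id: Optional[str]  # None when no car is involved, e.g. a support call
    kind: str                  # hypothetical kinds: "booking", "support_call", "parking_ticket"
    at: datetime


def member_snapshot(member_id: str, interactions: Iterable[Interaction]) -> dict[str, int]:
    """Count each kind of interaction for one member (what a support agent might see)."""
    return dict(Counter(i.kind for i in interactions if i.member_id == member_id))


def vehicle_touchpoints(vehicle_id: str, interactions: Iterable[Interaction]) -> list[Interaction]:
    """All touchpoints for one vehicle, oldest first."""
    return sorted((i for i in interactions if i.vehicle_id == vehicle_id), key=lambda i: i.at)


log = [
    Interaction("m-1", "car-7", "booking", datetime(2021, 5, 1, 9)),
    Interaction("m-1", None, "support_call", datetime(2021, 5, 2, 14)),
    Interaction("m-2", "car-7", "booking", datetime(2021, 5, 3, 8)),
    Interaction("m-1", "car-3", "booking", datetime(2021, 5, 4, 18)),
]
print(member_snapshot("m-1", log))                                # {'booking': 2, 'support_call': 1}
print([i.member_id for i in vehicle_touchpoints("car-7", log)])  # ['m-1', 'm-2']
```

A single interaction record serves both views: the member snapshot and the vehicle history are just different slices of the same table.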

Examples

Migrating data platforms

For example, as we migrate things over, we have a point-in-time snapshot of what a member's interactions with us look like:

- How many times the member has called us

- What their driving history looks like

- Whether they pay their parking tickets on time, etc.

This is available to the agents who support a member when they call us. We could simply re-create that and say, "Great, the data is available to you."

But having these core data models in place lets us ask whether we can add more value. We already have a hardened set of lifetime value models and RFM (recency, frequency, monetary) models. Can we use those insights to power how we interact with customers, for example when they're on a call, or the kind of service they get?
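RFM scoring is simple enough to sketch. The version below ranks members on three measures and splits each ranking into equal-sized bins. Recency is days since the last booking, where lower is better. Frequency is the number of bookings, and monetary value is total spend. The `Booking` shape and the three-bin scale are assumptions for illustration. Production RFM models typically use quintiles and handle ties more carefully.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Booking:
    member_id: str
    day: date
    amount: float


def rfm_raw(bookings: list[Booking], as_of: date) -> dict[str, tuple[int, int, float]]:
    """Per member: (days since last booking, number of bookings, total spend)."""
    last: dict[str, date] = {}
    freq: dict[str, int] = {}
    spend: dict[str, float] = {}
    for b in bookings:
        last[b.member_id] = max(last.get(b.member_id, b.day), b.day)
        freq[b.member_id] = freq.get(b.member_id, 0) + 1
        spend[b.member_id] = spend.get(b.member_id, 0.0) + b.amount
    return {m: ((as_of - last[m]).days, freq[m], spend[m]) for m in last}


def rank_scores(values: dict[str, float], higher_is_better: bool, bins: int = 3) -> dict[str, int]:
    """Sort members worst-to-best, then cut the ranking into `bins` groups (1 = worst)."""
    ordered = sorted(values, key=values.get, reverse=not higher_is_better)
    n = len(ordered)
    return {m: 1 + (i * bins) // n for i, m in enumerate(ordered)}


def rfm_scores(bookings: list[Booking], as_of: date, bins: int = 3) -> dict[str, tuple[int, int, int]]:
    raw = rfm_raw(bookings, as_of)
    r = rank_scores({m: v[0] for m, v in raw.items()}, higher_is_better=False, bins=bins)
    f = rank_scores({m: v[1] for m, v in raw.items()}, higher_is_better=True, bins=bins)
    mon = rank_scores({m: v[2] for m, v in raw.items()}, higher_is_better=True, bins=bins)
    return {m: (r[m], f[m], mon[m]) for m in raw}


bookings = [
    Booking("a", date(2021, 1, 30), 20.0),
    Booking("a", date(2021, 1, 25), 30.0),
    Booking("a", date(2021, 1, 10), 50.0),
    Booking("b", date(2021, 1, 1), 15.0),
    Booking("c", date(2021, 1, 20), 40.0),
    Booking("c", date(2021, 1, 15), 40.0),
]
print(rfm_scores(bookings, as_of=date(2021, 1, 31)))
# {'a': (3, 3, 3), 'b': (1, 1, 1), 'c': (2, 2, 2)}
```

A score tuple like (3, 3, 3) marks a recent, frequent, high-value member. That is the kind of signal that could shape how a support call is handled.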

Getting our data model right was absolutely the key to feeding data back into different product experiences, whether in real time or not.

OKRs need to span analytics, data science, product, engineering

Another key thing we learned over time applies to any re-platforming effort or core analytics group. It's essential to have shared goals across data engineering, product, data science, and data analytics.

Otherwise, everyone seems to want different things. The data PM I hired was excellent and managed to get everyone's act together. The product manager is really the glue: they put together the roadmap, set OKRs, and get teams to agree to them so everyone thinks holistically.

That was really crucial to our success.

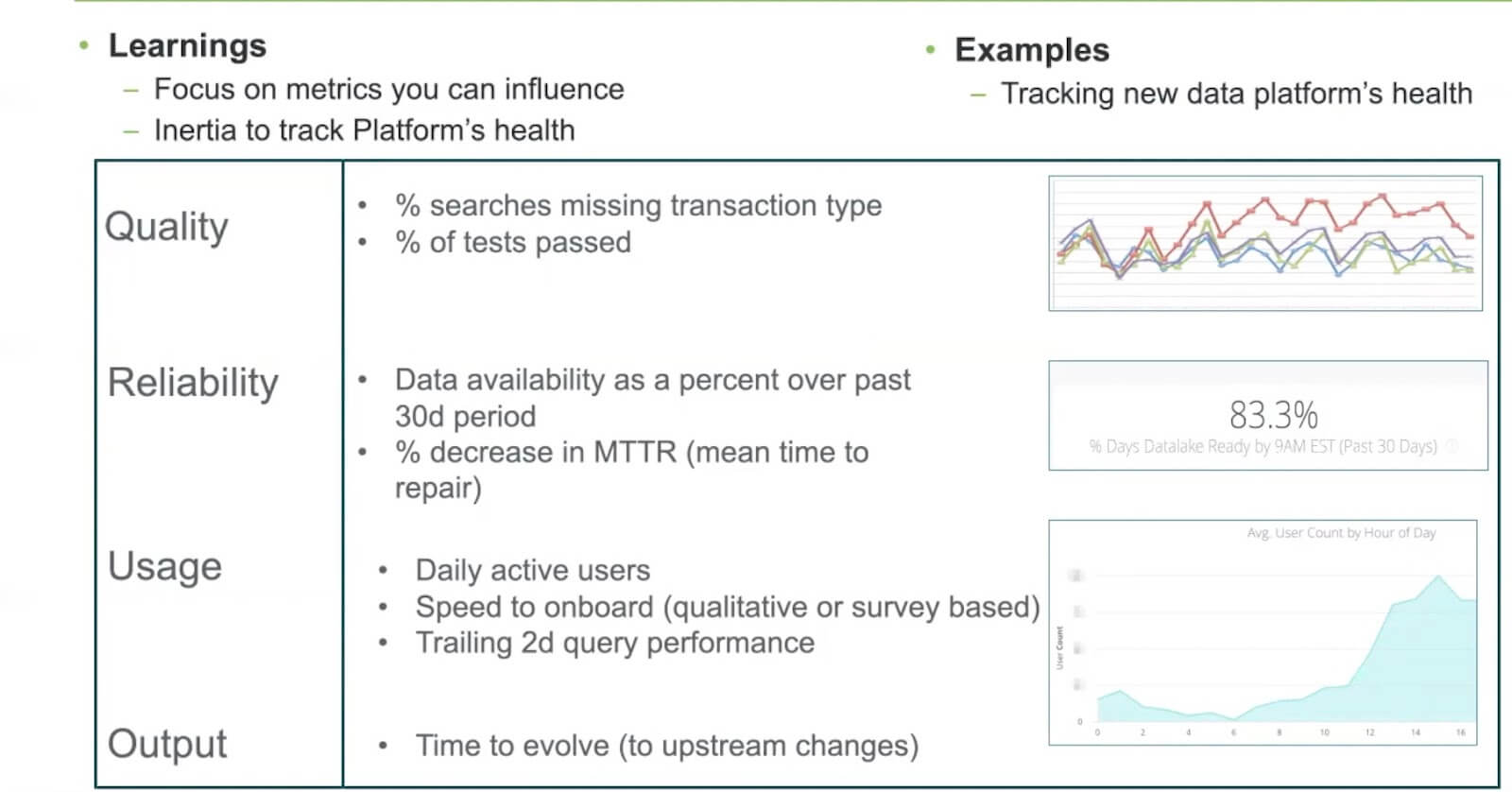

Platform metrics and OKRs

Another lesson is about what I call platform metrics. This goes back to my point that data matters a lot.

Initially, once we had completed a couple of phases and had a stable data platform, my key question was how to optimize it. For a search and reserve experience, I know the standard eCommerce metrics apply:

- What's your session conversion?

- What's your search conversion?

- Click-through rate, and so on.

But how do you track a platform? How do you track it in terms other than technical ones, like the number of passing tests or the failures we're seeing in production? And how do you motivate a team around it?

I kid you not, it took some effort to overcome inertia and get people excited about this, because it was something new, at least for me.

We came up with what we call data platform metrics, and this was really crucial.

Quality

We think about these metrics along four axes. The first is quality.

Say search conversion is a really important eCommerce measure for you. Is anything going missing at a low level in the search data, such as a specific transaction type? Gaps like that affect not only conversion and revenue but potentially how we distribute the fleet based on demand predictions.

That's why it's crucial to get quality right. One measure we started using, for example, was the completeness of search data.
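A completeness measure like this can be sketched in a few lines. It computes the share of search records that carry every required field and checks for expected transaction types that never showed up. The field names, transaction types, and helper names below are hypothetical placeholders, not Zipcar's actual search schema.

```python
from collections.abc import Iterable

# Hypothetical schema: fields every search record should carry.
REQUIRED_FIELDS = ("search_id", "member_id", "market", "transaction_type", "searched_at")


def completeness(records: list[dict], required: Iterable[str] = REQUIRED_FIELDS) -> float:
    """Fraction of records where every required field is present and not None.

    An empty batch counts as 0% complete, so a load that never arrived
    is not mistaken for a healthy one.
    """
    if not records:
        return 0.0
    required = tuple(required)
    complete = sum(all(r.get(f) is not None for f in required) for r in records)
    return complete / len(records)


def missing_transaction_types(records: list[dict], expected: set[str]) -> set[str]:
    """Expected transaction types that never appear in the batch (a silent data gap)."""
    seen = {r.get("transaction_type") for r in records}
    return expected - seen


batch = [
    {"search_id": 1, "member_id": "m-1", "market": "boston",
     "transaction_type": "hourly", "searched_at": "2021-05-01T09:00"},
    {"search_id": 2, "member_id": "m-2", "market": "boston",
     "transaction_type": "daily", "searched_at": None},
]
print(completeness(batch))                                               # 0.5
print(missing_transaction_types(batch, {"hourly", "daily", "one_way"}))  # {'one_way'}
```

The second check matters because a whole transaction type can disappear while every record that does arrive looks perfectly complete.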

Reliability

Another interesting one was data availability over the past 30 (or X) days. It let us track how our analysts, PMs, and business analysts were using our data sets.

It was essential to get data into people's hands by 9am. Our goal was for data to be available by 9am on 98% of days. When we started, we were closer to 75%, but just being able to track this made a big difference.
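The 9am target translates directly into a metric. Over a trailing window, it is the share of days on which the daily data load finished by 9am. This sketch assumes each day's load completion time is known, with None if the load never landed. It uses the 98% target from the text. The function and argument names are hypothetical, and timezone handling is left out.

```python
from datetime import date, datetime, time, timedelta
from typing import Optional

TARGET = 0.98          # from the text: data available by 9am on 98% of days
DEADLINE = time(9, 0)  # 9am local time (timezone handling omitted in this sketch)


def availability(loads: dict[date, Optional[datetime]], end: date, days: int = 30) -> float:
    """Share of the `days` days ending on `end` whose load landed by the 9am deadline.

    Days absent from `loads`, or mapped to None, count as misses.
    """
    on_time = 0
    for offset in range(days):
        day = end - timedelta(days=offset)
        landed = loads.get(day)
        if landed is not None and landed <= datetime.combine(day, DEADLINE):
            on_time += 1
    return on_time / days


end = date(2021, 6, 30)
loads = {end - timedelta(days=i): datetime.combine(end - timedelta(days=i), time(7, 30)) for i in range(30)}
loads[end] = datetime.combine(end, time(9, 45))  # one late day
loads[end - timedelta(days=3)] = None            # one failed load
score = availability(loads, end)
print(f"{score:.1%} (target {TARGET:.0%}, met: {score >= TARGET})")  # 93.3% (target 98%, met: False)
```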

I'd also recommend tracking failures over time and mean time to recover (MTTR). Failures over time is the negative measure; MTTR measures how fast you recover from a production failure.
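Both measures are easy to compute from an incident log. This sketch assumes each incident is recorded as a (failed_at, recovered_at) pair. The helper names are hypothetical.

```python
from datetime import datetime, timedelta


def mttr(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean time to recover: average of (recovered_at - failed_at) over incidents."""
    if not incidents:
        return timedelta(0)
    total = sum((recovered - failed for failed, recovered in incidents), timedelta(0))
    return total / len(incidents)


def failures_per_week(incidents: list[tuple[datetime, datetime]], start: datetime, weeks: int) -> list[int]:
    """Number of failures in each consecutive week starting at `start`."""
    counts = [0] * weeks
    for failed, _ in incidents:
        week = (failed - start).days // 7
        if 0 <= week < weeks:
            counts[week] += 1
    return counts


incidents = [
    (datetime(2021, 6, 1, 2, 0), datetime(2021, 6, 1, 3, 0)),  # 1 hour outage
    (datetime(2021, 6, 9, 6, 0), datetime(2021, 6, 9, 9, 0)),  # 3 hour outage
]
print(mttr(incidents))                                        # 2:00:00
print(failures_per_week(incidents, datetime(2021, 6, 1), 2))  # [1, 1]
```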

Usage

The third axis is usage. How are users using the platform, at what times of day, and what does query performance look like? Knowing this, we can schedule production snapshots and other jobs on the data platform around usage trends within the day.

We also have offices in the UK, so we need to account for more than North American time zones.
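Usage trends can be summarized by bucketing query logs into hours. Bucketing in UTC puts UK and North American users on one clock. The quietest hours are then natural candidates for heavy batch jobs. The sketch assumes a log of timezone-aware query timestamps. The helper names and the fixed-offset time zones in the example are illustrative only.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def queries_by_utc_hour(query_times: list[datetime]) -> Counter:
    """Count queries per UTC hour (0-23). Timestamps must be timezone-aware."""
    return Counter(t.astimezone(timezone.utc).hour for t in query_times)


def quietest_hours(query_times: list[datetime], n: int = 3) -> list[int]:
    """The n UTC hours with the fewest queries; hours with no queries count as 0."""
    counts = queries_by_utc_hour(query_times)
    return sorted(range(24), key=lambda h: (counts[h], h))[:n]


boston = timezone(timedelta(hours=-4))  # fixed offset standing in for US Eastern (summer)
london = timezone(timedelta(hours=1))   # fixed offset standing in for UK summer time
sample = [
    datetime(2021, 6, 1, 10, 0, tzinfo=boston),   # 14:00 UTC
    datetime(2021, 6, 1, 10, 30, tzinfo=boston),  # 14:30 UTC
    datetime(2021, 6, 1, 10, 0, tzinfo=london),   # 09:00 UTC
]
print(queries_by_utc_hour(sample))  # Counter({14: 2, 9: 1})
print(quietest_hours(sample))       # [0, 1, 2]
```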

In short, there's definitely some inertia, much of it because people aren't sure which metrics to measure. We've learned through iteration, and by failing multiple times, that this has been a great lens for us. There's real value in PMs being engaged in these metrics too.

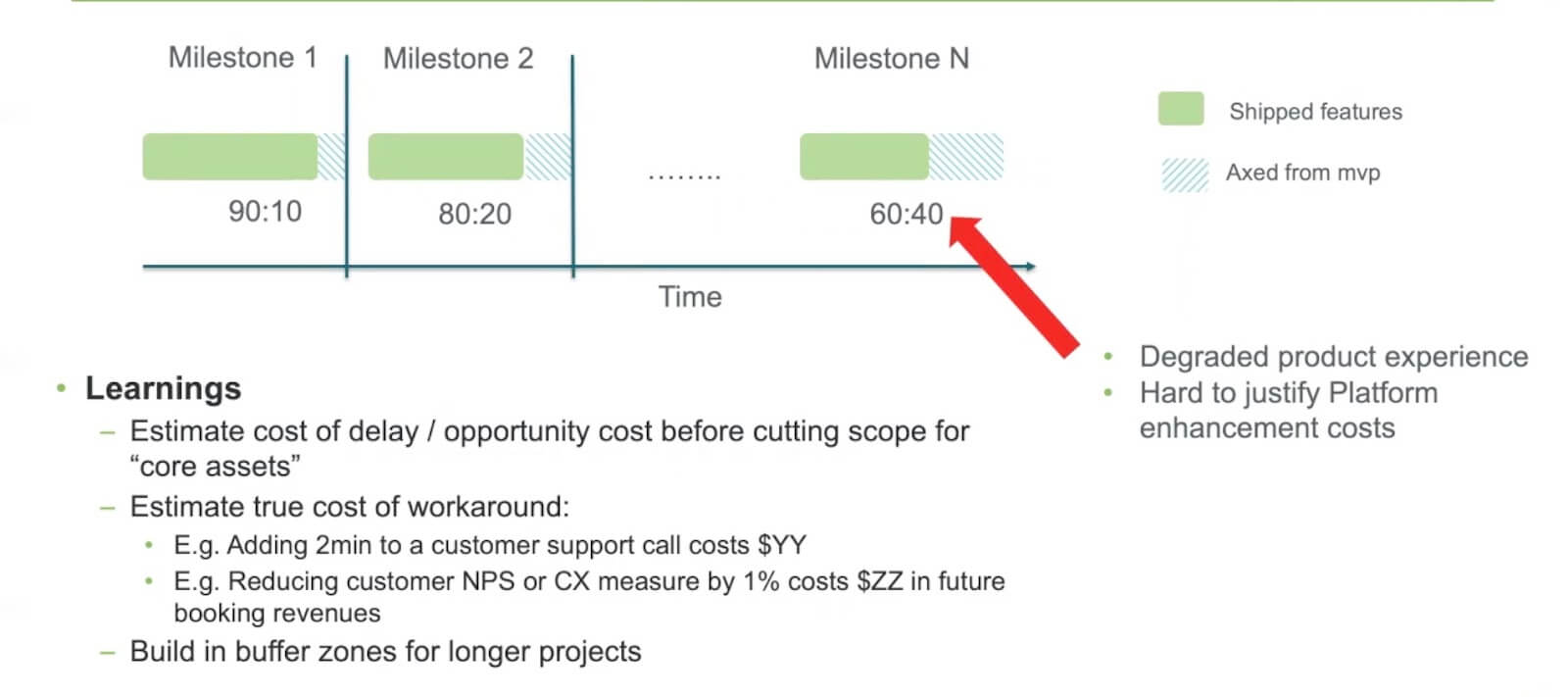

Beware of the MVP-lite trap

The last one I have is to beware of the MVP-lite trap. What do I mean by this?

I think every PM runs into this daily. If you've got 10 features to get out the door in two weeks, there's no way you're shipping all 10. Most of the time, it becomes a question of which features will slip: what's really MVP, and what's not?

There's no getting away from that. We had a series of milestones ahead. As a platform PM, I think it's a really slippery slope if you start by saying, "90% of the features in milestone one are covered, and the other 10% will slip into milestone two."

Milestone two then ends up with more that slips into milestone three. Before you know it, six or eight months down the line, you might end up with a product that's 60% complete, which can mean a degraded experience for your end users.

It's going to be really hard, at least it was for me, to justify reinvestment. Your leadership will say, "Hey, we just gave you all that money to go recreate this platform. Why are you asking for more money to fix a platform that's not even six months old?"

As platform PMs, what I really encourage my team to do is hold the course on what they consider the core product set. Going back to core assets: once you understand what they are, estimate the cost of delay whenever something in your core asset base isn't going to fit or ship.

Think about opportunity cost, too. Those two have been my best friends from a metrics perspective. Always evaluate features based on cost of delay or similar metrics.
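One widely used way to turn cost of delay into a ranking is CD3, or cost of delay divided by duration. It says to do first the work that loses the most value per week of effort. This is a named technique swapped in for illustration, not necessarily how the team scored features. The feature names, dollar figures, and helper names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    cost_of_delay_per_week: int  # value lost for each week the feature is not live
    duration_weeks: int          # estimated effort to ship it

    @property
    def cd3(self) -> float:
        return self.cost_of_delay_per_week / self.duration_weeks


def prioritize(features: list[Feature]) -> list[Feature]:
    """Highest CD3 first."""
    return sorted(features, key=lambda f: f.cd3, reverse=True)


def total_delay_cost(ordered: list[Feature]) -> int:
    """Cost of delay accrued until each feature ships.

    Assumes features are built one after another by a single team.
    """
    elapsed, total = 0, 0
    for f in ordered:
        elapsed += f.duration_weeks
        total += f.cost_of_delay_per_week * elapsed
    return total


backlog = [
    Feature("feature A (parity)", 10_000, 2),
    Feature("feature B (new capability)", 30_000, 10),
    Feature("feature C (small fix)", 8_000, 1),
]
ranked = prioritize(backlog)
print([f.name for f in ranked])
print(total_delay_cost(backlog), total_delay_cost(ranked))  # 484000 428000
```

Here the big, valuable feature B goes last despite having the highest weekly cost of delay, because it takes the longest to build.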

Another is estimating the cost of workarounds. This may go without saying, but for a platform PM it becomes more and more important over time.

Adding two minutes to a customer support call for one feature, for example, is okay. But if you do that for 20 different features, the minutes add up. Each extra minute costs you, both in member experience and in the actual cost of the call.
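The arithmetic is worth writing down, because each workaround looks cheap in isolation. This sketch totals the extra support minutes per month across all workarounds and prices them. The call volumes and cost per minute are made-up inputs, not Zipcar figures, and the member experience cost is not captured at all.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workaround:
    feature: str
    extra_minutes_per_call: float  # added handling time when this workaround applies
    affected_calls_per_month: int  # calls that hit this workaround


def monthly_workaround_cost(workarounds: list[Workaround], cost_per_minute: float) -> tuple[float, float]:
    """Return (extra support minutes per month, their cost)."""
    minutes = sum(w.extra_minutes_per_call * w.affected_calls_per_month for w in workarounds)
    return minutes, minutes * cost_per_minute


one = [Workaround("feature-1", 2.0, 500)]
twenty = [Workaround(f"feature-{i}", 2.0, 500) for i in range(1, 21)]
print(monthly_workaround_cost(one, cost_per_minute=1.50))     # (1000.0, 1500.0)
print(monthly_workaround_cost(twenty, cost_per_minute=1.50))  # (20000.0, 30000.0)
```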

You don't want to end up with death by a thousand cuts, especially when that's exactly what the re-platforming effort is meant to solve.

We've also tried to do a decent job of linking Net Promoter Scores and other customer experience measures back to revenue. That link can be to estimated future booking revenue or to probability to book. That's another measure we've used.
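As a rough illustration of linking experience scores to revenue, start by computing NPS the standard way. That is the percentage of promoters (scores of 9 or 10) minus the percentage of detractors (scores of 0 to 6). Then weight each group by a probability to book. The per-group booking probabilities and the average booking value are hypothetical inputs that a real model would estimate from data.

```python
def nps_category(score: int) -> str:
    if not 0 <= score <= 10:
        raise ValueError(f"NPS responses are 0-10, got {score}")
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"


def nps(scores: list[int]) -> float:
    """Net Promoter Score in [-100, 100]."""
    if not scores:
        raise ValueError("need at least one response")
    cats = [nps_category(s) for s in scores]
    return 100 * (cats.count("promoter") - cats.count("detractor")) / len(cats)


def expected_booking_revenue(scores: list[int], p_book: dict[str, float], avg_booking_value: float) -> float:
    """Sum over respondents of P(book | NPS group) * average booking value."""
    return sum(p_book[nps_category(s)] for s in scores) * avg_booking_value


responses = [10, 9, 9, 8, 7, 6, 10, 10, 2, 9]
P_BOOK = {"promoter": 0.6, "passive": 0.4, "detractor": 0.2}  # hypothetical probabilities
print(nps(responses))  # 40.0
print(expected_booking_revenue(responses, P_BOOK, avg_booking_value=50.0))
```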

Again, I highly encourage you to make these estimates. It's okay to take them with a pinch of salt, but having them in place will help you protect your core assets.

The other thing, which goes without saying, is building in buffer zones. From time to time you'll need to do a lot of catch-up on the product side, especially for a platform.

Other gotchas

In closing, here are a few other gotchas I've run into over time.

Clarify roles

Folks ask me what the role of a product manager is versus a delivery manager versus an architect. Clarifying these roles doesn't take that long, especially in smaller companies.

To give you an idea, Zipcar's core product, engineering, and marketing unit is around 150 people, so it was pretty easy to work this out. But these are lessons learned.

In my view, the PM is accountable for the key result, and the architect makes the product vision real. The delivery manager escalates risk, manages timelines, and brings stakeholders together.

It's been super useful to do that, for me at least.

Litmus test

How do you know you've protected your core assets enough? One way to gauge it is to try a new product offering or a brand-new segment. You should be able to launch it on your platform in, say, two or three weeks without really impacting your core assets. Those might be your search data or, from my perspective, the member or ECO model.

If I can do that, I basically know we've invested in the right place. And as a platform PM, I actually think that's a success.

Platform PMs are not ‘jack of all trades’

Last but not least, I hear this a lot from my team: "Are we too broad? Are we just being a jack of all trades?" It's actually quite the opposite.

In my opinion, we're consultants. If we're doing our role well, we've gone broad enough and we've filled in the gaps when the experience PMs weren't able to. That really sets you up to succeed from a vision perspective.

You're likely the first person people come to when they have an idea they want to validate and vet: does this work? And your response could be, "What if we do it this way instead?"

So I think it's a great place to be and you can influence a lot.

Thank you.
